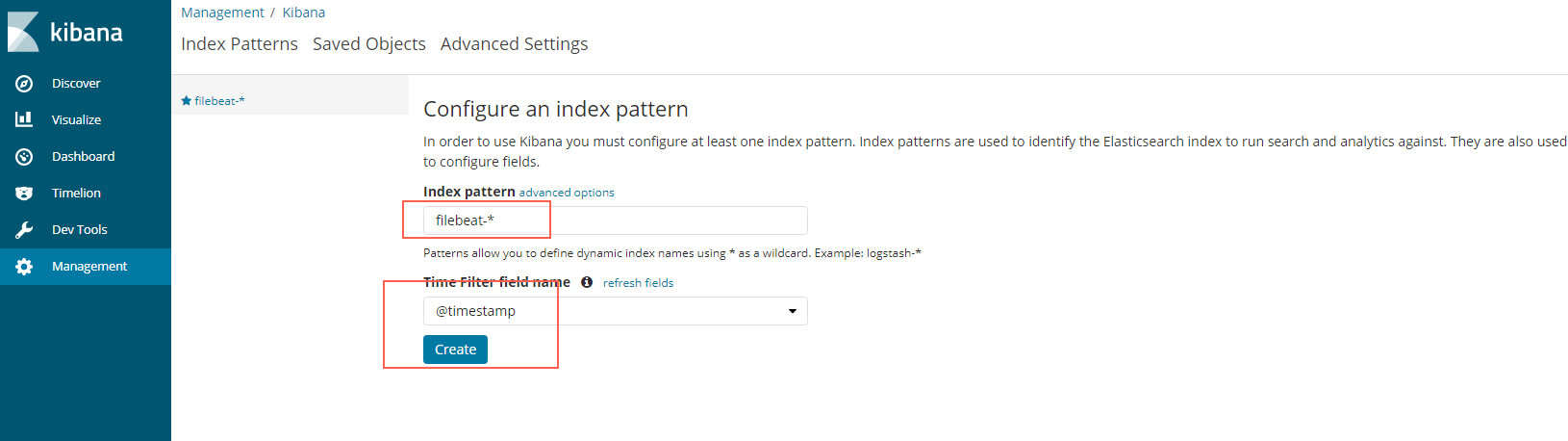

Logstash will receive, process, and send our logs to Elasticsearch, and we will be able to visualize them with Kibana. We'll start with the ELK side of things and then move on to the Nginx configuration. Our new Docker machine will run on a t2.small EC2 instance; we can create it with `docker-machine create -d amazonec2 --amazonec2-instance-type t2.small elk-machine-name`.

I found existing ELK images that made things a lot easier, sebp/elk:622 and sebp/elkx:622, and I recommend you read their documentation. We will use the elkx:622 image, which is based on the elk one. It comes with X-Pack, which provides some nice features, one of them being Kibana authentication. Our Dockerfile, `compose/production/elk/Dockerfile`, builds `FROM sebp/elkx:622` and then `COPY`s files from the build context into the image.
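
If you prefer to script the provisioning step, here is a minimal Python sketch that builds the commands and only executes them when asked. The `docker-machine create` call mirrors the one above (spelled with the long `--driver` flag). The `docker run` port mappings are an assumption taken from the sebp/elk documentation defaults (5601 for Kibana, 9200 for Elasticsearch, 5044 for the Logstash Beats input), and the container name `elk` is hypothetical. This post builds its own image from the Dockerfile instead, so treat the run command as an illustration only.

```python
from __future__ import annotations

import shlex
import subprocess

# Default ports from the sebp/elk documentation (assumed to apply to elkx too).
ELK_PORTS = {"kibana": 5601, "elasticsearch": 9200, "logstash_beats": 5044}


def machine_create_args(name: str, instance_type: str = "t2.small") -> list[str]:
    """docker-machine command that provisions an EC2-backed Docker host."""
    return [
        "docker-machine", "create",
        "--driver", "amazonec2",
        "--amazonec2-instance-type", instance_type,
        name,
    ]


def elk_run_args(image: str = "sebp/elkx:622", container: str = "elk") -> list[str]:
    """docker run command publishing the Kibana, Elasticsearch and Beats ports."""
    args = ["docker", "run", "-d", "--name", container]
    for port in ELK_PORTS.values():
        args += ["-p", f"{port}:{port}"]
    return args + [image]


def run(args: list[str], dry_run: bool = True) -> str:
    """Return the shell form of a command; execute it only when dry_run is False."""
    if not dry_run:
        # Needs docker-machine/docker on PATH and AWS credentials configured.
        subprocess.run(args, check=True)
    return shlex.join(args)


if __name__ == "__main__":
    print(run(machine_create_args("elk-machine-name")))
    print(run(elk_run_args()))
```

Before running the `docker run` command for real, point your Docker client at the new machine with `eval $(docker-machine env elk-machine-name)`.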

ELASTICSEARCH FILEBEAT INSTALL

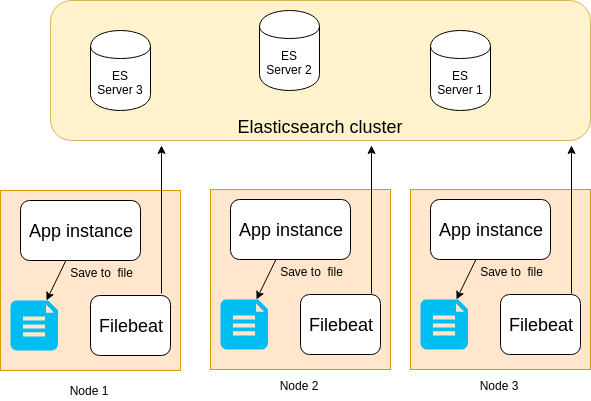

With this new setup, our ELK stack will persist the logs on a separate Docker machine. We will install Filebeat in our Nginx Docker image and let it ship the logs to the ELK stack.
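
Once Filebeat ships the raw Nginx lines, something has to split them into fields before they are useful in Kibana; in this setup that is Logstash's job. As a rough illustration of what that processing amounts to, here is a Python sketch that parses one line in Nginx's predefined `combined` log format using named groups. It stands in for a grok filter and is not the Logstash configuration shipped with the image; the sample line is made up.

```python
from __future__ import annotations

import re

# Nginx's predefined "combined" format:
# $remote_addr - $remote_user [$time_local] "$request" $status $body_bytes_sent
# "$http_referer" "$http_user_agent"
COMBINED = re.compile(
    r'(?P<remote_addr>\S+) - (?P<remote_user>\S+) \[(?P<time_local>[^\]]+)\] '
    r'"(?P<request>[^"]*)" (?P<status>\d{3}) (?P<body_bytes_sent>\d+) '
    r'"(?P<http_referer>[^"]*)" "(?P<http_user_agent>[^"]*)"'
)


def parse_combined(line: str) -> dict | None:
    """Split one access-log line into named fields; None if it doesn't match."""
    match = COMBINED.match(line)
    if match is None:
        return None
    fields = match.groupdict()
    # Numeric fields become ints so they can be aggregated later.
    fields["status"] = int(fields["status"])
    fields["body_bytes_sent"] = int(fields["body_bytes_sent"])
    return fields


if __name__ == "__main__":
    sample = ('203.0.113.7 - - [10/Mar/2018:13:55:36 +0000] '
              '"GET /index.html HTTP/1.1" 200 612 "-" "curl/7.58.0"')
    print(parse_combined(sample))
```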

ELASTICSEARCH FILEBEAT UPDATE

In this post, I will go over setting up an ELK stack (Elasticsearch, Logstash, and Kibana) with the setup we've been working on throughout these posts. Right now, if we deploy an update with the scripts from the last post, the Nginx logs will be lost, since the deploy creates new Docker machines.

I added this pattern to my `/usr/share/filebeat/module/system/syslog/ingest/pipeline.json` file as the first entry in the `patterns` array. After several days of working on this, I didn't want to mess with what I had working, which is why you see `BACULA_DEVICE` as one of my patterns. A useful resource for this was a grok constructor, which helps with the quirky built-in patterns.
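
The post doesn't reproduce the pattern itself, so the sketch below only shows the mechanics, assuming `pipeline.json` is plain JSON with a top-level `processors` list. It prepends a pattern to the first grok processor's `patterns` array. Order matters here: Elasticsearch's grok processor tries the patterns in order and keeps the first one that matches, so a pattern at the front takes precedence over the module's defaults. The pattern string `%{HYPOTHETICAL_PATTERN}` used in the checks is a placeholder.

```python
from __future__ import annotations

import json
from pathlib import Path

# Path from the post; it may differ between Filebeat versions and installs.
PIPELINE = Path("/usr/share/filebeat/module/system/syslog/ingest/pipeline.json")


def prepend_grok_pattern(pipeline: dict, pattern: str) -> dict:
    """Put `pattern` first in the first grok processor's `patterns` list.

    Grok tries patterns in order and stops at the first match, so the
    prepended pattern takes precedence over the module's defaults.
    """
    for processor in pipeline.get("processors", []):
        grok = processor.get("grok")
        if grok is None:
            continue
        patterns = grok.setdefault("patterns", [])
        if pattern in patterns:
            patterns.remove(pattern)  # avoid duplicates on re-runs
        patterns.insert(0, pattern)
        return pipeline
    raise ValueError("no grok processor found in pipeline")


def update_pipeline_file(path: Path, pattern: str) -> None:
    """Rewrite a plain-JSON pipeline file in place with the pattern prepended."""
    data = json.loads(path.read_text())
    path.write_text(json.dumps(prepend_grok_pattern(data, pattern), indent=2))
```

Usage would be `update_pipeline_file(PIPELINE, "<your grok expression>")`; keep a copy of the original file before rewriting it.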

ELASTICSEARCH FILEBEAT HOW TO

As part of my project to create a Kibana dashboard to visualize my external threats, I decided I wanted a map view, built from geoip data, of where the IP addresses were coming from. Filebeat installs several dashboards by default; I used them as inspiration to see what could be done and set out to imitate them. The way I needed the data to flow is shown in the flowchart below.

There is no Filebeat package distributed as part of pfSense, however. FreeBSD does have one, but using it would mean adding more software to my router outside the pfSense ecosystem, which would be a headache later on. Therefore, I ship the logs to an internal CentOS server where Filebeat is installed. Filebeat then needs to read and parse the firewall log.

Turn on Logging of the Default Block Rule in pfSense

First off, I had to enable the firewall to log the requests it was blocking: by default, pfSense blocks all incoming traffic but does not log it. Once that was done, since I already had remote logging set up, my firewall logs flowed to my internal server.

Each log entry is a single line with a specific format that I wanted to parse out. The grok processor was applying a parsing string that looks like `%…`, which does not really help me turn it into structured data. I kludged a lot of it together, since all I wanted were the IP and port data: I wasn't sure how to skip fields that were variable and didn't want to write a complex regex, so I stored them in fields called data_x.
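
To make the data_x idea concrete, here is a Python sketch of the same approach outside grok: strip the syslog tag, split the filterlog entry on commas, name the columns of interest (interface, action, direction, protocol, source and destination IP and, for TCP/UDP, ports), and keep every other column as `data_<index>`. The column positions are an assumption based on the IPv4 layout of pfSense's raw filter log format; check them against your own log lines. IPv6 entries use a different layout, and the sketch simply skips them. The sample line and addresses are made up.

```python
from __future__ import annotations

import re

# Syslog tag in front of the CSV payload, e.g. "filterlog:" or "filterlog[12345]:".
PREFIX = re.compile(r"filterlog(?:\[\d+\])?:\s*")

# Assumed column positions for IPv4 entries; verify against your own raw lines.
IPV4_FIELDS = {
    4: "interface",
    6: "action",
    7: "direction",
    8: "ip_version",
    16: "proto",
    18: "src_ip",
    19: "dst_ip",
}
# Ports follow the addresses only for TCP and UDP entries.
PORT_FIELDS = {20: "src_port", 21: "dst_port"}


def parse_filterlog(line: str) -> dict[str, str] | None:
    """Name the columns we care about and keep the rest as data_<index>."""
    match = PREFIX.search(line)
    payload = line[match.end():] if match else line
    cols = payload.strip().split(",")
    if len(cols) < 20 or cols[8] != "4":
        return None  # not an IPv4 filterlog entry (IPv6 uses another layout)
    names = dict(IPV4_FIELDS)
    if cols[16] in ("tcp", "udp"):
        names.update(PORT_FIELDS)
    return {names.get(i, f"data_{i}"): value for i, value in enumerate(cols)}


if __name__ == "__main__":
    sample = (
        "Mar 10 13:55:36 pfsense filterlog: 5,,,1000000103,em0,match,block,in,4,"
        "0x0,,64,12345,0,DF,6,tcp,60,203.0.113.5,198.51.100.10,54321,22,0,S,"
        "1234567890,,29200,,mss;sackOK;TS;nop;wscale"
    )
    entry = parse_filterlog(sample)
    print(entry["action"], entry["src_ip"], entry["dst_port"])
```

The `src_ip` field is what a geoip lookup (for example, Elasticsearch's geoip ingest processor) would consume to place blocked sources on a Kibana map.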
